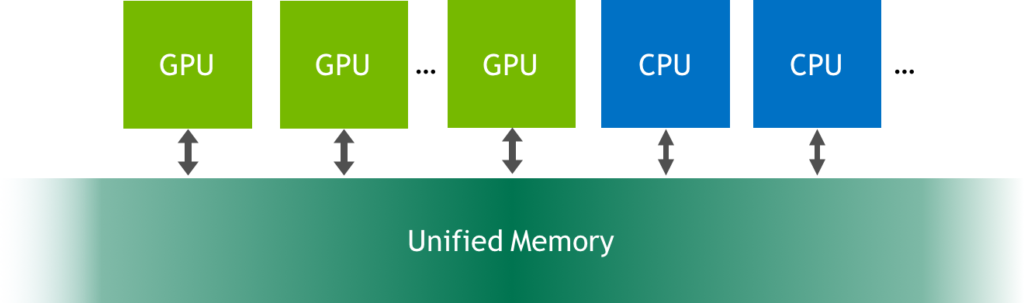

cuda - Splitting an array on a multi-GPU system and transferring the data across the different GPUs - Stack Overflow

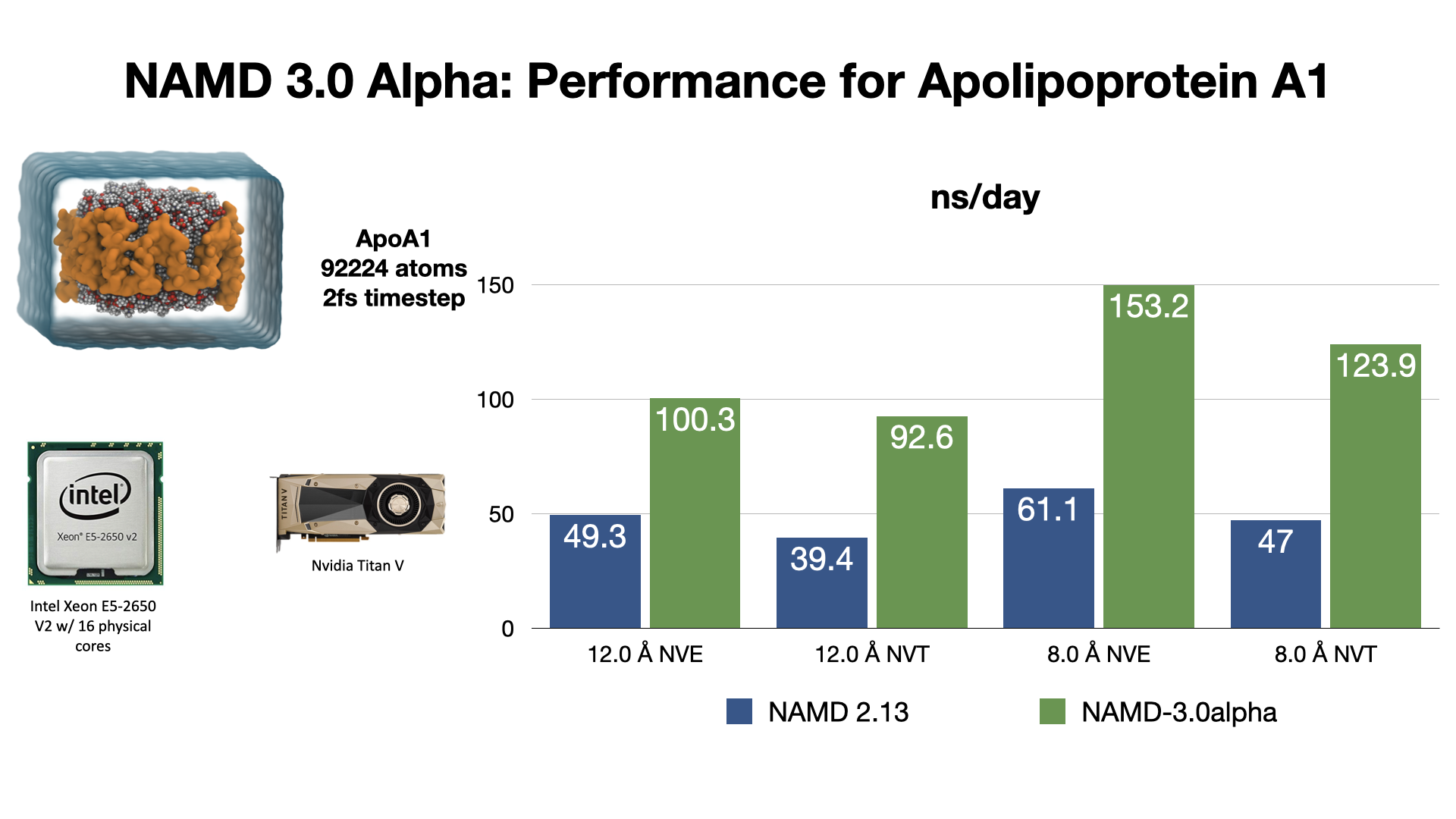

How the hell are GPUs so fast? A HPC walk along Nvidia CUDA-GPU architectures. From zero to nowadays. | by Adrian PD | Towards Data Science